Have you noticed that ranking #1 on Google doesn’t guarantee visibility in LLMs?

That’s because AI search engines such as ChatGPT, Perplexity, and Google’s AI Mode are fundamentally changing how users find information and how search results are generated.

Welcome to the era of query fan-out, where a single search query triggers dozens of sub-searches behind the scenes. If you’re not optimizing for this, your content might go unnoticed by the LLMs.

In this guide, you’ll learn the exact meaning of query fan-out, why it matters for SEO, and how to optimize your content to appear in AI-generated answers.

Let’s dive in.

How AI Search Algorithms (LLMs) Work: The Big Picture

Before exploring query fan-out specifically, it’s important to understand the complete process of how AI search engines generate answers.

When you submit a query to ChatGPT, Perplexity, or Google’s AI Mode, the system goes through several sophisticated steps:

Step 1: Query Understanding & Intent Detection

The AI analyzes your query to understand what you’re really asking for, whether you need a comparison, a list of options, step-by-step instructions, or general information. The system also classifies the query type (short_fact, reason, comparison, etc.) to determine the answer format and which models to invoke.

Step 2: Query Fan-Out Process

For complex or multifaceted queries, the system may break the input into multiple related subqueries through a process called query fan-out. Using LLM-powered prompt expansion, it generates dozens of synthetic sub-queries across different types to ensure a thorough answer.

Step 3: RAG (Retrieval-Augmented Generation)

This is where AI search engines fundamentally differ from traditional chatbots.

Traditional LLMs (such as base ChatGPT without search) rely solely on their training data, which has a cutoff date for its knowledge. They cannot access current information or specific websites.

AI Search Engines use RAG to access the live web in real-time. For each query generated during the query fan-out process, the system retrieves fresh content from external sources via partner search APIs, unless the information is already within the model’s internal knowledge base.

For each sub-query, the AI search engine retrieves content from external websites and processes it as follows:

- Chunking: Divides documents into smaller, semantically coherent passages.

- Embedding: Transforms each passage into a high-dimensional vector representation.

- Dense Retrieval: Utilizes vector embeddings to perform similarity searches, identifying the most relevant passages.

- Custom Corpus Construction: Assembles an initial pool of candidate sources to inform the response.
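The retrieval steps above can be sketched in a few lines of Python. This is a minimal illustration, not how any specific AI search engine is implemented: the function names are hypothetical, and a toy bag-of-words vector stands in for the dense neural embedding a production system would use.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 40) -> list[str]:
    """Split text into passages of at most max_words words, breaking on sentence ends."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], []
    for sentence in sentences:
        words = sentence.split()
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts instead of a learned dense vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages most similar to the query."""
    q = embed(query)
    ranked = sorted(passages, key=lambda p: cosine(q, embed(p)), reverse=True)
    return ranked[:top_k]
```

The practical takeaway is that retrieval works at the passage level: a page is only as retrievable as its individual, self-contained passages.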

Step 4: Ranking & Reranking

This is the critical filtering stage where the AI decides which sources to actually use:

- Reciprocal Rank Fusion (RRF): Boosts sources that appear across multiple sub-queries for consistency

- Neural Reranking: LLM-based rerankers perform pairwise comparison, judging passages head-to-head to determine which is more relevant

- Quality Signals: Factors like freshness, authority, intent alignment, domain credibility, and passage-level answerability

- Diversity Constraint: Ensures multiple perspectives, not just one source

- Reasoning Chain Evaluation: Passages must support logical steps in the AI’s reasoning process
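Of the signals above, Reciprocal Rank Fusion is the easiest to illustrate. The sketch below assumes each sub-query returns a ranked list of sources and that each source scores 1 / (k + rank) per list it appears in; k = 60 is a commonly used default, and the domain names are made up for the example.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse several ranked lists; sources that recur across lists accumulate score."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc in enumerate(ranking, start=1):
            scores[doc] += 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical results for three sub-queries.
fused = reciprocal_rank_fusion([
    ["a.example", "b.example", "c.example"],
    ["b.example", "d.example"],
    ["b.example", "a.example"],
])
print([doc for doc, _ in fused])  # ['b.example', 'a.example', 'd.example', 'c.example']
```

Here b.example wins even though it is not first for every sub-query, because it shows up in all three lists. That is the mechanism behind "authority must extend to sub-queries".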

Step 5: Reasoning & Answer Synthesis

The AI uses reasoning chains – structured sequences of logical inferences – to construct the answer:

- Claim Planning: Identifies key points to address based on reasoning steps

- Grounding: Anchors each claim to retrieved passages

- Conflict Resolution: Handles discrepancies between sources, preferring fresher and more authoritative content

- Model Selection: Chooses specialized LLMs for specific tasks (summarization, comparison, translation, etc.)

Step 6: Citation & Response Generation

Finally, the system generates the final response:

- Selective Citation: Attaches sources to specific claims that directly support reasoning steps (not all retrieved documents are cited)

- Multimodal Integration: May incorporate text, images, video, audio, and structured data

- Personalization Layer: User embeddings influence which format and content is shown based on your search history, location, and preferences

What Is Query Fan-Out?

Query fan-out is a technique AI search engines use to break a complex query into multiple related sub-queries. The system then pulls the relevant information from each set of results and composes a single response for the end user.


For example, if someone types “payment gateway for SaaS” into ChatGPT, the system runs separate, real-time searches for related sub-queries such as:

- “best payment gateway for SaaS 2025”

- “best rated payment gateway for SaaS”

- “payment gateway fees comparison”

- “SaaS payment processing options”

- “subscription payment platform features”

- “Stripe vs Braintree for SaaS”

Then, it will search for each query, select the most relevant results for each, and combine them into a single result that best serves the end user.

Why Do LLMs Use Query Fan-Out?

Retrieving results for additional related sub-queries yields a more comprehensive answer, so the user has less need to search again.

Based on that, LLMs are, in one sense, trying to predict the user’s next search query.

Types of Query Fan-Out Sub-Queries

Rather than focusing on just the main type of the query, LLMs search for other types of sub-queries to cover the answer better.

Reformulation query: This type involves rephrasing the main query in different ways to capture related results.

Example: If the main query is “payment gateway for SaaS”, reformulation queries could be:

- “SaaS billing platforms”

- “subscription payment solutions for software”

Comparative query: LLMs look for comparisons between options to give a more detailed answer.

Example:

- “Stripe vs PayPal for SaaS”

- “Braintree vs Chargebee pricing comparison”

Why it matters: Comparison queries have high commercial intent and signal purchase readiness.

Related query: These explore topics closely related to the main query to expand context.

Example:

- “Best invoicing software for SaaS companies”

- “Recurring billing automation tools”

Implicit query: Queries that the user might implicitly want to know but didn’t explicitly ask.

Example:

- “Payment gateway fees and hidden costs”

- “PCI compliance for SaaS payments”

Entity expansion: LLMs bring in related entities (brands, tools, or services) that might be relevant.

Example:

- Adding “Square,” “Recurly,” or “Zoho Subscriptions” when the user searches for SaaS payment gateways

Personalized query: Results tailored based on user preferences, location, or history.

Example:

- If a user previously looked at European SaaS companies, queries might include: “Best EU-friendly SaaS payment gateways”

- For a user in the US: “Top US-based payment processors for SaaS”
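To make the sub-query types concrete, here is a minimal sketch that expands one query into typed sub-queries. It uses fixed string templates as a stand-in for the LLM-powered expansion a real engine performs; the function name, the templates, and the parameters are all assumptions made for illustration, and the "related" type is omitted because it cannot be produced from templates alone.

```python
from itertools import combinations
from typing import Optional


def fan_out(query: str, entities: list[str], region: Optional[str] = None) -> dict[str, list[str]]:
    """Expand a query into sub-queries grouped by type (template-based stand-in)."""
    sub_queries = {
        "reformulation": [f"best {query}", f"top rated {query}"],
        "comparative": [f"{a} vs {b}" for a, b in combinations(entities, 2)],
        "implicit": [f"{query} fees", f"{query} compliance"],
        "entity_expansion": [f"{entity} {query}" for entity in entities],
    }
    if region:
        # Personalized sub-queries only appear when user context is available.
        sub_queries["personalized"] = [f"{query} in {region}"]
    return sub_queries


expanded = fan_out("payment gateway for SaaS", ["Stripe", "Braintree", "PayPal"], region="EU")
for query_type, queries in expanded.items():
    print(query_type, queries)
```

Running a function like this over your own primary keywords is a quick way to draft a list of sub-queries to check your content against.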

How is this different from the way traditional search works?

Traditional search engines like Google simply return a list of links on the SERP for your specific query, whether it’s complex or simple.

AI search engines work differently: they instantly break your query into multiple sub-queries, search for each simultaneously, and synthesize everything into one comprehensive answer, all in seconds, invisible to the user.
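The "search for each simultaneously" part can be pictured as a simple parallel fan-out. The sketch below is an assumption-heavy illustration: the in-memory index and its URLs are invented, and `search` stands in for whatever search API a real system would call.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical index standing in for a real search API:
# each sub-query maps to a ranked list of made-up URLs.
FAKE_INDEX = {
    "best payment gateway for SaaS 2025": ["site-a.example/best", "site-b.example/top-10"],
    "payment gateway fees comparison": ["site-c.example/fees", "site-a.example/best"],
    "Stripe vs Braintree for SaaS": ["site-d.example/stripe-vs-braintree"],
}


def search(sub_query: str) -> list[str]:
    """Look up one sub-query; unknown queries return no results."""
    return FAKE_INDEX.get(sub_query, [])


def fan_out_search(sub_queries: list[str], max_workers: int = 4) -> list[str]:
    """Run all sub-queries in parallel, then merge results without duplicates."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as sub_queries.
        per_query_results = list(pool.map(search, sub_queries))
    merged: list[str] = []
    for urls in per_query_results:
        for url in urls:
            if url not in merged:
                merged.append(url)
    return merged


print(fan_out_search(list(FAKE_INDEX)))
```

The merged pool contains pages that never ranked for the original query at all, which is why sub-query coverage matters.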

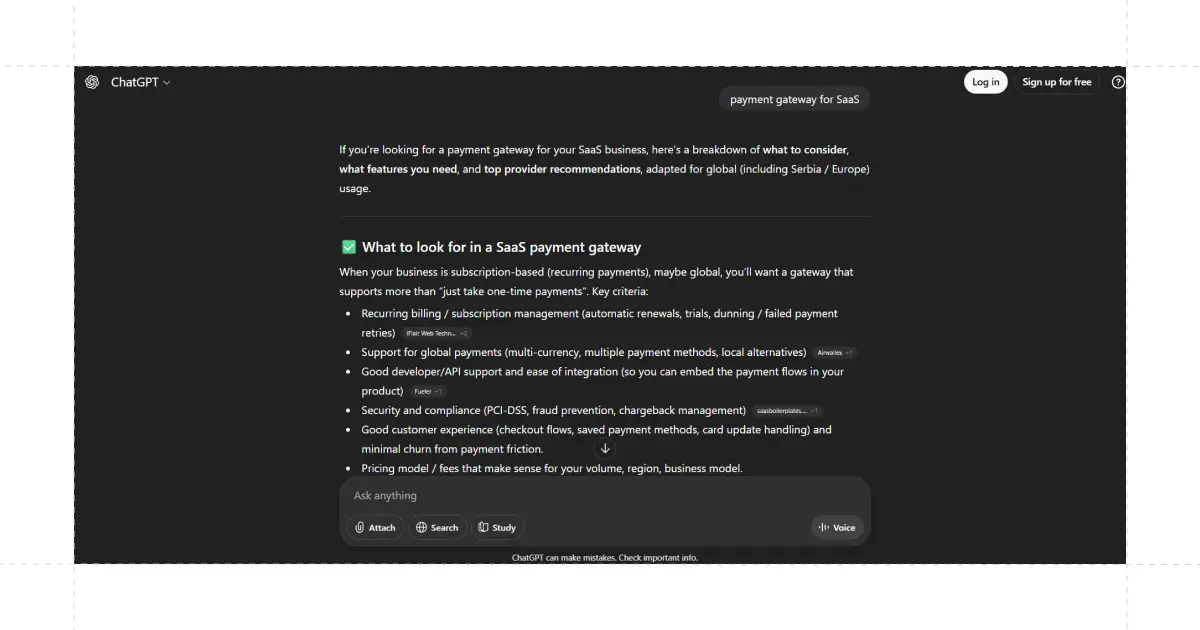

Let’s take the query example we already covered above, “payment gateway for SaaS”.

Here is the comparison between the results for this query on ChatGPT and in Google SERPs:

ChatGPT:

- Provides a synthesized breakdown of key criteria to evaluate (recurring billing, multi-currency support, developer-friendly APIs, fraud protection, flexible pricing models, and local/regional presence)

- Highlights essential features that matter specifically for SaaS businesses (vs. one-off ecommerce)

- Includes top gateway and billing platform recommendations that work especially well for SaaS models

- Offers structured guidance on what to look for in a payment gateway for SaaS

- Presents information in an organized, actionable format with clear feature categories without needing to visit multiple sites

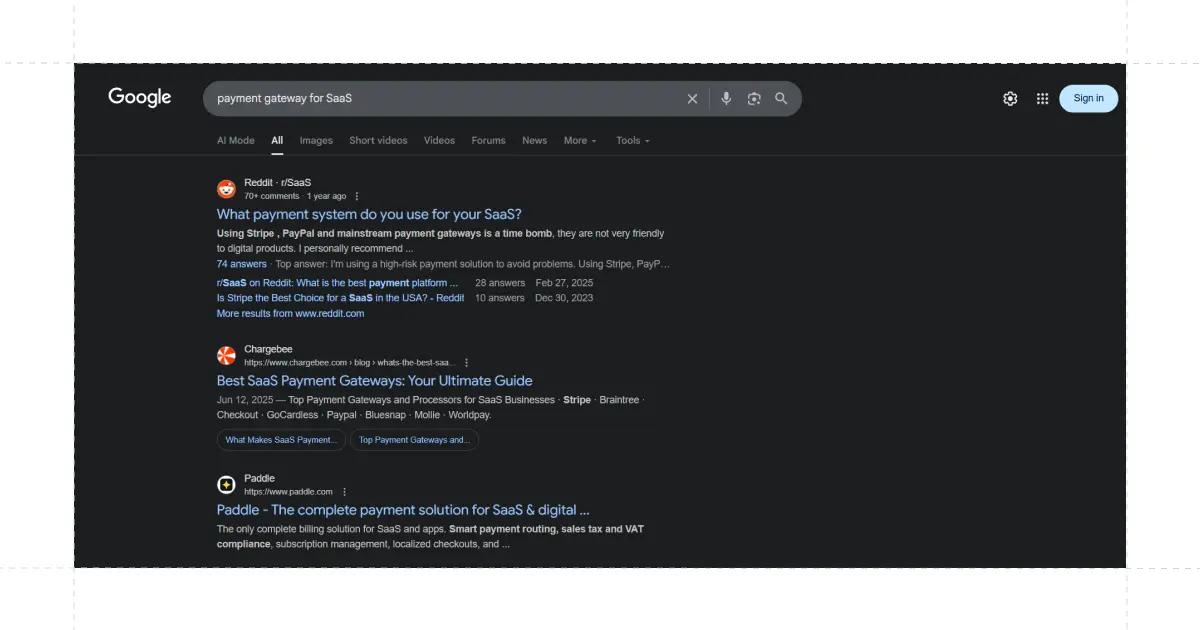

Google SERP:

- Shows mostly links to blogs, forums, and product pages (e.g., Reddit, Quora).

- Users must click through multiple pages to gather all relevant information.

- Information is fragmented and requires manual comparison.

What Does Query Fan-Out Mean for SEO?

Query fan-out, as presented by Google at Google I/O and implemented in Google’s AI Mode, is now a core principle for all AI search engines.

Conversational search is now taking a share of the search market, so understanding how LLMs retrieve and present results to end users is crucial for every SEO and marketer.

Query fan-out is one of several techniques (alongside RAG, neural reranking, and chunking) that SEOs must understand in order to optimize a website to appear in those results. This complexity has led to the emergence of specialized GEO (Generative Engine Optimization) agencies focused on AI search optimization.

Why Does This Change Everything?

Old Rule: Rank #1 for your target keyword = guaranteed visibility

New Rule: Ranking for the main keyword doesn’t guarantee appearance in AI results

- Main keyword rankings aren’t enough: For example, you might rank #4 for “best project management software,” yet ChatGPT displays a passage from a competitor because that competitor ranks well for “Asana vs Trello for remote marketing teams”, a sub-query ChatGPT deemed more relevant.

- Authority must extend to sub-queries: Your content must be authoritative for dozens of related queries, not just the primary one.

- Zero-click searches are increasing: AI-synthesized answers mean users get their information without clicking through to your site, which makes every click more valuable.

- Content depth matters more than ever: Thin content that only targets one keyword won’t survive in query fan-out.

- ICP analysis becomes critical: Understanding your Ideal Customer Profile helps you identify which sub-queries actually matter to your audience.

While query fan-out presents challenges, it also creates opportunities:

- Long-tail dominance: Smaller sites can win by owning specific sub-queries

- Expertise rewarded: Deep, authoritative content gets cited in AI answers

- Comparison content wins: “X vs Y” pages are prime AI fodder

- Fresh content advantage: Recently updated content with “best” or “vs” in the Title Tag tends to surface more often in AI results

LLM Principles Behind Query Fan-Out

To effectively leverage query fan-out, it’s important to understand how LLMs approach sub-queries, explore sources, and personalize results for users.

1. Include “Best” and “Comparative” in Title Tag

To align with query-fan-out principles, your pages should include “best” and comparative terms in the Title Tag, meta descriptions, headings, and content. This helps LLMs recognize your page as relevant for users searching for comparisons or top options.

Example optimization:

❌ Generic: “Payment Gateways for Software Companies”

✅ Optimized: “Best Payment Gateways for SaaS To Choose in 2025”

Note: Make sure that your Title Tag does not go over 60 characters; the optimal number is 55.
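A quick way to enforce the length guideline above is a small check you can run over your titles. This is a minimal sketch: the function name and return strings are hypothetical, and the 60 and 55 character thresholds simply mirror the note above.

```python
def check_title(title: str, limit: int = 60, target: int = 55) -> str:
    """Classify a Title Tag by character count against the limit and target."""
    length = len(title)
    if length > limit:
        return f"too long ({length} > {limit})"
    if length > target:
        return f"ok, above target ({length} > {target})"
    return f"ok ({length})"


print(check_title("Best Payment Gateways for SaaS To Choose in 2025"))  # ok (48)
```

Character count is only a proxy, since search engines truncate titles by rendered width, so treat the result as a rough guide.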

2. Transparency in Source Exploration

Perplexity openly shows the subqueries it uses and the sources it draws on, helping users understand how answers are formed.

(perplexity queries – image)

Why this matters: You can see exactly which sub-queries Perplexity considers relevant, eliminating guesswork in your content strategy.

Example: Searching “payment gateway for SaaS” reveals Perplexity searches for:

- “best payment gateways for SaaS 2025”

- “how to choose a payment gateway for SaaS”

- “payment gateway integration for subscription software”

Action: Use Perplexity to reverse-engineer which sub-queries you should cover.

3. Similarity to Autosuggest Keywords

Query fan-out functions similarly to autosuggest, exploring multiple variations of a query to cover the user’s intent comprehensively.

Strategic insight: Your autosuggest keywords are a preview of AI sub-queries. Incorporate these variations into your content.

4. Personalization of Results

Google and other AI search engines automatically personalize search results based on:

- User search history

- Geographic location

- Device type

- Previous interactions

- Industry context

Key takeaway: The same query yields different results for different users. Optimize for diverse sub-queries to capture varied user segments.

How to Optimize Your Content for Query Fan-Out?

To succeed in a query-fan-out world, publishers must think beyond the primary keyword and create content that satisfies multiple sub-queries and user intents.

Here’s how:

1. Expand Keyword Research

Why: Query fan-out makes static keyword research obsolete. More importantly, LLMs don’t just look at individual keywords; they evaluate whether your site covers the entire topic cluster.

The Cluster Approach: Instead of targeting isolated keywords, map out complete topic clusters consisting of:

- Pillar content: Your main comprehensive guide

- Sub-topic pages: Comparisons, use cases, and specific aspects

- Supporting content: FAQs, case studies, and related resources

Action steps:

- Map your primary topic into a complete cluster structure

- Identify all sub-queries within the cluster (reformulation, comparative, related, implicit)

- Create interlocking content that references other pieces in the cluster

- Use tools like Semrush, Ahrefs, AnswerThePublic, AlsoAsked, and Perplexity to discover sub-queries

Example Cluster for “payment gateway for SaaS”:

Pillar: “Complete Guide to Payment Gateways for SaaS Companies”


Sub-topics:

- “Stripe vs Braintree 2025: Which is Best for SaaS?”

- “Payment Gateway Fees Comparison for Subscription Businesses”

- “SaaS Recurring Billing Platforms: Top 10 Options”

Supporting:

- “PCI Compliance Requirements for SaaS Companies”

- “Handling Failed Payments in Subscription Businesses”

Pro tip: LLMs pull answers from sites that demonstrate topical authority across an entire cluster. Cover the whole cluster, and you’ll appear more frequently in AI-generated answers.

2. Create Comparison Pages

Why: LLMs favor content that answers comparative queries.

Action: Build pages or sections specifically comparing top tools, products, or services. Include tables, pros/cons, pricing, and features.

Example: “Asana vs Trello vs Monday.com for remote marketing teams.”

3. Optimize Title Tags and Meta Descriptions with “Best” and Comparative Terms

Why: Titles and metadata guide LLMs in understanding relevance for “best” and comparative intent.

Action: Incorporate terms like “best,” “top,” “vs,” or “comparison” in strategic places:

- Page title

- H1/H2 headings

- Meta description

Examples:

✅ List content: “10 Best Payment Gateways for SaaS in 2025 | Pricing & Features Compared”

✅ Comparison content: “Stripe vs Braintree vs PayPal: Best Payment Gateway for SaaS (2025 Guide)”

✅ How-to content: “How to Choose a Payment Gateway for SaaS | Complete 2025 Guide”

4. Use Listing or “Round-Up” Articles

Why: Lists cover multiple sub-queries in a single content piece.

Action: Create content that highlights several products, services, or solutions, and include detailed features for each.

Example: “Top 10 Payment Gateways for SaaS Companies in 2025.”

5. Regularly Refresh Content

Why: LLMs value up-to-date and authoritative content for sub-queries.

Action: Update information, pricing, features, or comparisons regularly. Ensure all facts are up to date and accurate.

Example: Recheck pricing and feature updates for “Stripe vs Braintree” every 6–12 months.

6. Cover the Full Range of Sub-Queries

Why: Appearing for the main keyword isn’t enough; LLMs may surface content for sub-queries instead.

Action: Incorporate content that addresses reformulation, comparative, related, implicit, entity expansion, and personalized queries.

Example: Include FAQs like “What is the cheapest SaaS payment gateway?” or “EU-friendly subscription payment platforms.”
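To audit a page against a list of sub-queries, a rough coverage check can help. The sketch below is a simplification under stated assumptions: it treats a sub-query as "covered" when most of its words appear somewhere on the page, the 0.75 threshold is arbitrary, and the function names are hypothetical. Real engines match on meaning, not just shared words.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase alphanumeric word set of a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def coverage(page_text: str, sub_queries: list[str], threshold: float = 0.75) -> dict[str, bool]:
    """Flag each sub-query as covered if enough of its words appear on the page."""
    page = tokens(page_text)
    report = {}
    for query in sub_queries:
        query_tokens = tokens(query)
        share = len(query_tokens & page) / len(query_tokens) if query_tokens else 0.0
        report[query] = share >= threshold
    return report


page = "What is the cheapest SaaS payment gateway? We compare fees for Stripe and Braintree."
print(coverage(page, [
    "cheapest SaaS payment gateway",
    "EU-friendly subscription payment platforms",
]))
```

Sub-queries flagged as not covered are candidates for a new FAQ entry, section, or supporting page.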

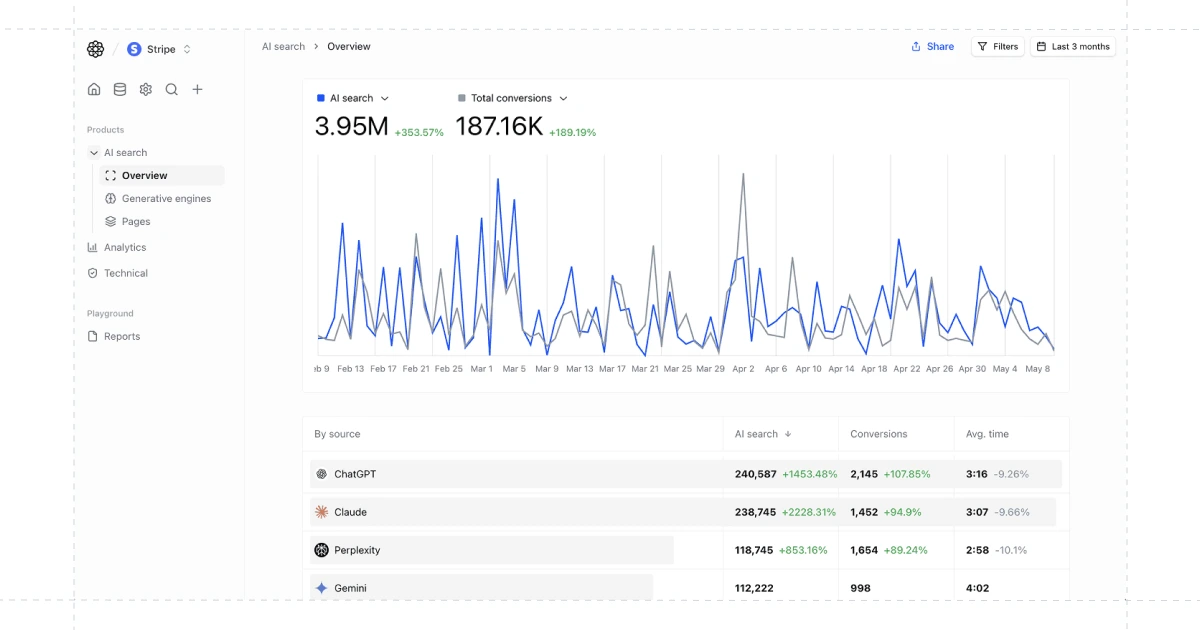

How to Track AI Visibility?

Once you’ve optimized your content for query fan-out, the next critical step is measuring your performance across AI search platforms.

You need specialized tracking methods because traditional analytics tools like Google Search Console won’t show you how often your content appears in ChatGPT, Perplexity, or Google’s AI Mode.

Why AI Search Tracking Matters

Many websites already receive traffic from AI platforms without realizing it. Users discover content through ChatGPT or Perplexity, then visit the site directly, making this traffic invisible in standard analytics.

Proper tracking reveals:

- Which AI platforms drive the most qualified traffic

- The exact prompts that surface your brand in AI results

- How your visibility compares to competitors across different LLMs

- Which content formats and topics perform best in AI citations

Two Main AI Visibility Tracking Options

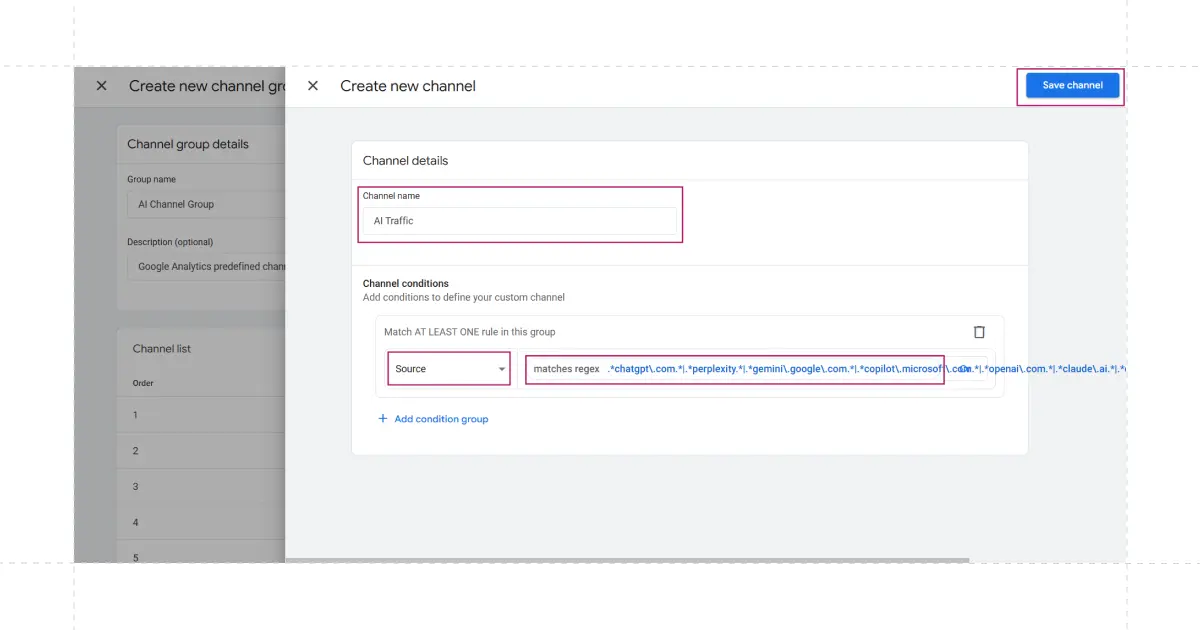

1. Google Analytics 4 Setup (Free)

The most accessible method is configuring Google Analytics 4 to identify AI traffic sources.

By creating a custom channel group with regex patterns for AI platforms (ChatGPT, Perplexity, Claude, Gemini, etc.), you can segment and analyze this traffic alongside traditional sources.

This approach requires some technical setup but provides basic visibility into AI-driven visits.
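The same idea can be prototyped outside GA4 before you configure the channel group. The sketch below classifies referrer URLs with a regex; the hostname list is an assumption (a sample of common AI assistant domains, not an exhaustive or official list), the names are hypothetical, and the actual GA4 setup happens in the GA4 interface rather than in code.

```python
import re
from urllib.parse import urlparse

# Assumed sample of AI platform hostnames; extend it for your own traffic.
AI_REFERRER_PATTERN = re.compile(
    r"(^|\.)(chatgpt\.com|chat\.openai\.com|perplexity\.ai|claude\.ai"
    r"|gemini\.google\.com|copilot\.microsoft\.com)$"
)


def classify_referrer(referrer_url: str) -> str:
    """Label a referrer as 'AI Search' if its hostname matches a known AI platform."""
    host = urlparse(referrer_url).hostname or ""
    return "AI Search" if AI_REFERRER_PATTERN.search(host) else "Other"


for url in ["https://chatgpt.com/", "https://www.perplexity.ai/search?q=x", "https://www.google.com/"]:
    print(url, "->", classify_referrer(url))
```

Anchoring the pattern to the hostname, rather than searching the whole URL, avoids false positives from lookalike domains or query strings that merely mention an AI platform.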

2. Specialized AI Analytics Tools (Free & Paid)

For comprehensive tracking, AI search monitoring tools such as Ahrefs, Semrush, or AtomicAGI offer dedicated AI search analytics.

These tools provide:

- Real-time visibility tracking across multiple AI platforms

- Prompt monitoring to see the exact user queries surfacing your content

- Competitor analysis showing who dominates specific AI results

- AI-specific metrics beyond standard web analytics

Tracking AI visibility isn’t just about vanity metrics; it’s about understanding which optimization strategies actually work.

By analyzing how well your content covers query fan-out results, you can:

- Identify which sub-queries drive the most valuable traffic

- Discover content gaps where competitors dominate

- Optimize your topic clusters based on real AI citation data

- Allocate resources to the most effective AI platforms for your niche

As AI search continues to grow, early tracking adoption gives you a competitive advantage.

Key Takeaways

Here are the seven essential principles you need to remember when optimizing for query fan-out and AI search:

- Query fan-out is the new search paradigm: AI engines break down queries into multiple sub-queries to provide comprehensive answers.

- Main keyword rankings are no longer sufficient: You must rank for dozens of related sub-queries to capture AI search traffic.

- Content comprehensiveness wins: Deep, authoritative content covering multiple angles of a topic performs best.

- Comparison content is king: “X vs Y” pages are prime candidates for AI citations.

- Freshness matters more: Regular content updates signal authority and relevance to LLMs.

- Personalization is automatic: The same query yields different results for different users based on context.

- Think like an LLM: Anticipate related questions, implicit queries, and entity expansions when creating content.

Conclusion

Query fan-out represents a fundamental shift in how search engines understand and respond to user queries. For SEO professionals and content creators, this means moving beyond single-keyword optimization to creating comprehensive, authoritative content that addresses the full spectrum of user intent.

The publishers who will thrive in this new era are those who:

- Create genuinely comprehensive content

- Maintain fresh, up-to-date information

- Build authority across sub-queries

- Optimize for comparison and “best” queries

- Think holistically about user intent

The question is no longer: “Does my page rank for this keyword?”

The question is now: “Does my content answer all the questions my audience has about this topic?”

Answer that question well, and you’ll succeed in the age of query fan-out.

If you are looking for a professional approach and real results, Omnius is here to help! As a GEO agency, we specialize in helping businesses get mentions within LLM platforms.

Book a free 30-minute call and discover how we can help you get mentions within LLM platforms with personalized GEO strategies!

FAQs

What’s the difference between Query-fan-out and RAG?

Query fan-out breaks a single search into multiple subqueries to cover user intent comprehensively. RAG retrieves and processes external content for each sub-query, accessing live web data to generate answers.

What is the purpose of a query?

A query initiates the search process, where both traditional and AI search engines identify user intent. In AI search, a single query triggers query fan-out, spawning multiple sub-queries to deliver comprehensive, personalized answers.


Source: omnius.so